Rank-Biasing Attention Models

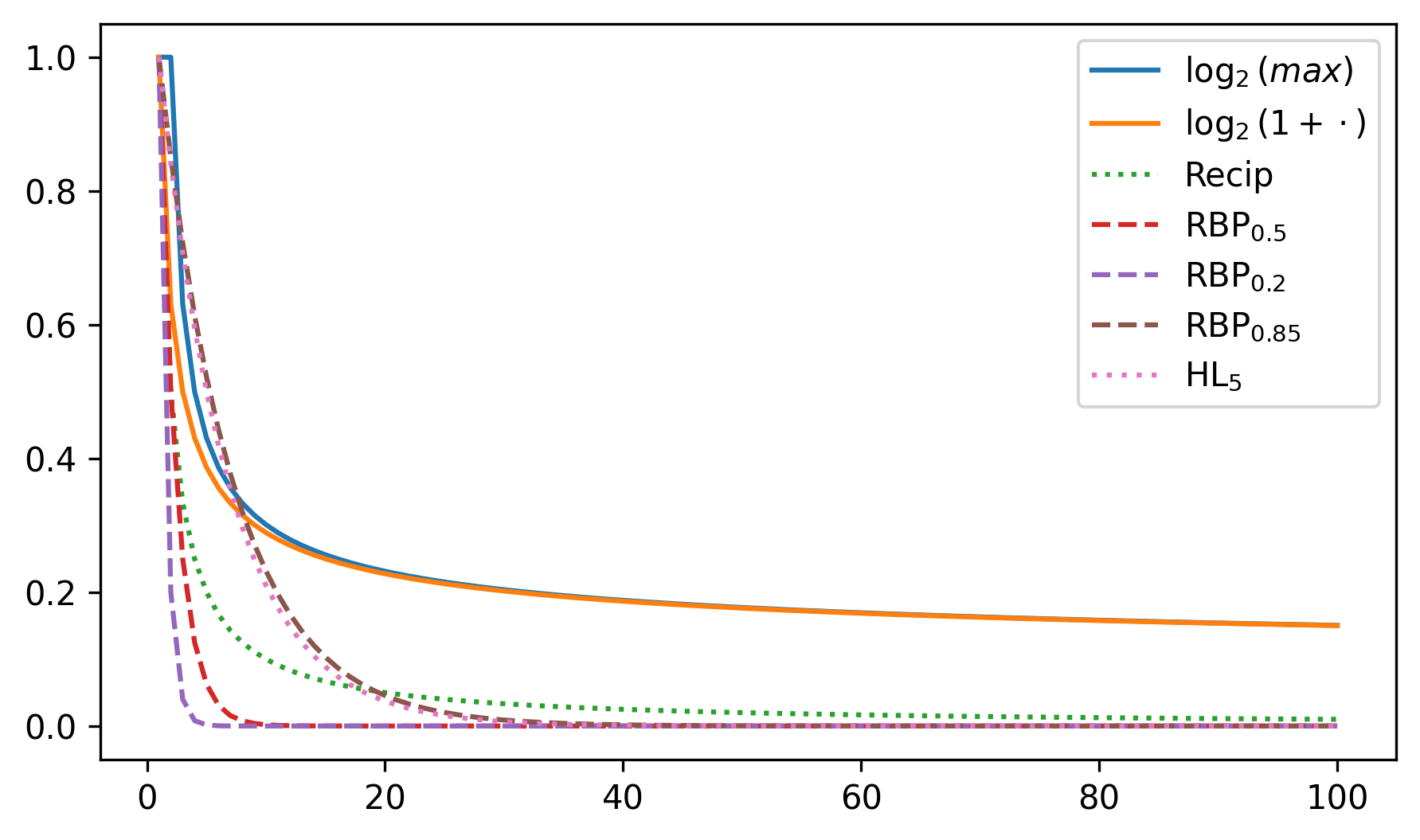

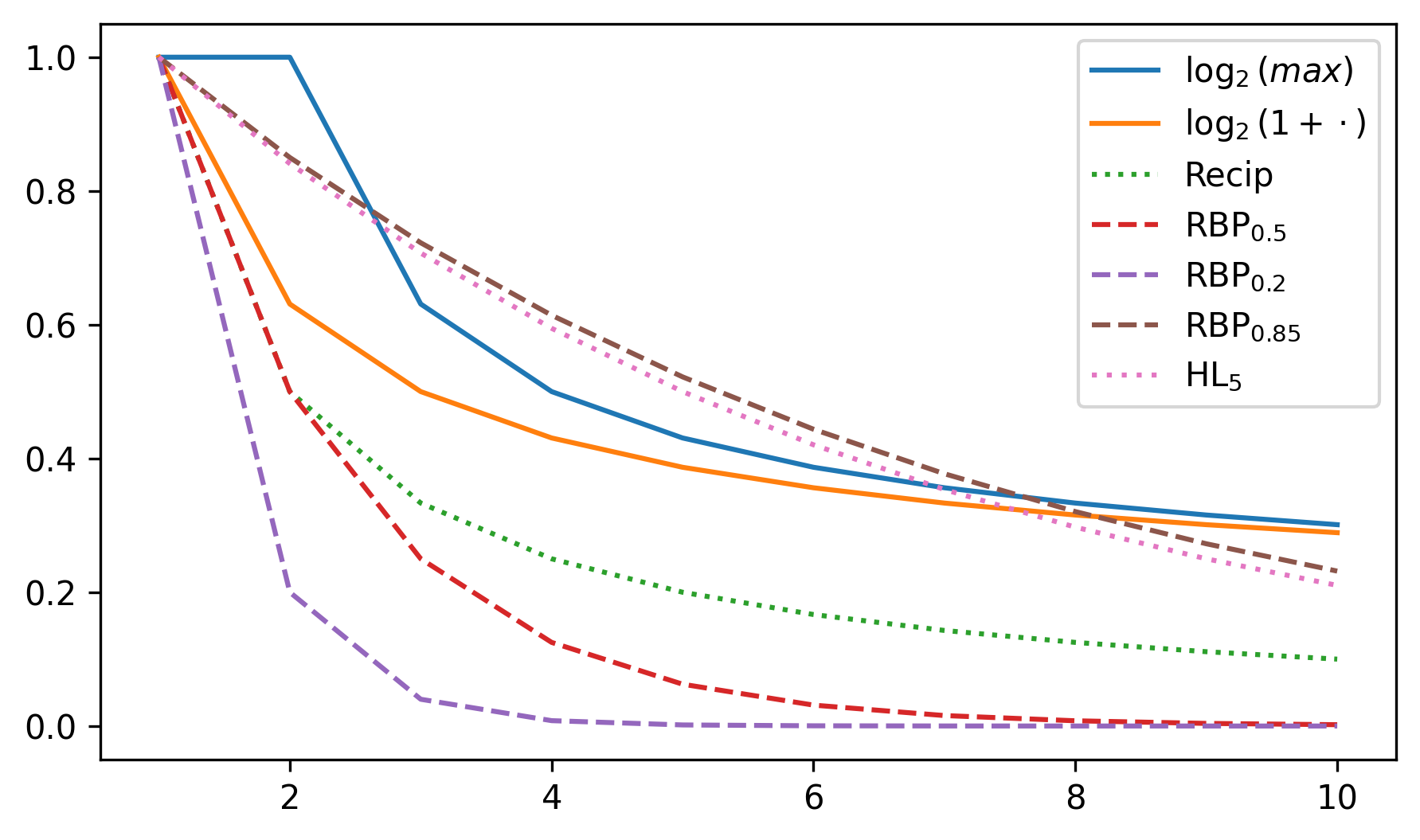

Rank-discounted metrics weight recommended items by their ranking position, using a discount or position attention (or exposure) model that approximates the amount of attention users pay to different ranking positions. Some metrics have a model inherent to their design, while others (such as RBP) have pluggable attention models.
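As a minimal sketch of what a pluggable attention model means, assuming binary relevance and representing the model as a plain function from 1-based rank to weight, the hypothetical `discounted_score` below sums the attention weight of every rank that holds a relevant item. It is an illustration only, not any library's API; real metrics usually add normalization (NDCG, for example, divides by the ideal DCG).

```python
from typing import Callable, Sequence

# Hypothetical type: an attention model maps a 1-based rank to a weight.
AttentionModel = Callable[[int], float]


def discounted_score(relevant: Sequence[bool], attention: AttentionModel) -> float:
    """Sum the attention weights of the ranks that hold relevant items.

    `relevant[i]` is True if the item at rank i + 1 is relevant.
    """
    return sum(attention(rank) for rank, rel in enumerate(relevant, start=1) if rel)


if __name__ == "__main__":
    ranking = [True, False, True]
    # Swap attention models without changing the metric code.
    print(discounted_score(ranking, lambda k: 1 / k))         # reciprocal-rank weights
    print(discounted_score(ranking, lambda k: 0.5 ** (k - 1)))  # geometric weights
```

Keeping the attention model as a separate argument is what lets a metric such as RBP be reconfigured while metrics with a built-in model hard-code it.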

This document describes the behavior of those attention models.

Core Attention Models

- Logarithmic discounting (typically base-2, used in NDCG)

- Reciprocal rank (used by MRR)

- Geometric cascade (the default for RBP, whose attention model can be replaced)
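The three models above can be sketched as weight functions of the 1-based rank k. The function names, the `base` and `patience` parameters, and the 0.85 default patience are illustrative assumptions. The logarithmic form shown, 1 / log_b(k + 1), is one common DCG formulation; some definitions use 1 / max(1, log_b k) instead. The `rbp` helper applies the usual rank-biased precision formula, (1 - p) times the sum of p^(k - 1) over relevant ranks.

```python
import math
from typing import Sequence


def log_attention(rank: int, base: float = 2.0) -> float:
    """Logarithmic discount 1 / log_base(rank + 1); rank 1 gets weight 1."""
    return 1.0 / math.log(rank + 1, base)


def reciprocal_attention(rank: int) -> float:
    """Reciprocal-rank weight 1 / rank, as used by MRR."""
    return 1.0 / rank


def geometric_attention(rank: int, patience: float = 0.85) -> float:
    """Geometric cascade: the user moves on to the next rank with probability `patience`."""
    return patience ** (rank - 1)


def rbp(relevant: Sequence[bool], patience: float = 0.85) -> float:
    """Rank-biased precision: (1 - p) * sum of geometric weights at relevant ranks."""
    total = sum(
        geometric_attention(rank, patience)
        for rank, rel in enumerate(relevant, start=1)
        if rel
    )
    return (1 - patience) * total


if __name__ == "__main__":
    # Compare how quickly each model decays over the first five ranks.
    for k in range(1, 6):
        print(k, round(log_attention(k), 3), round(reciprocal_attention(k), 3),
              round(geometric_attention(k), 3))
```

All three give the top rank a weight of 1; they differ in how fast attention falls off further down the list, with the logarithmic discount decaying slowest.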